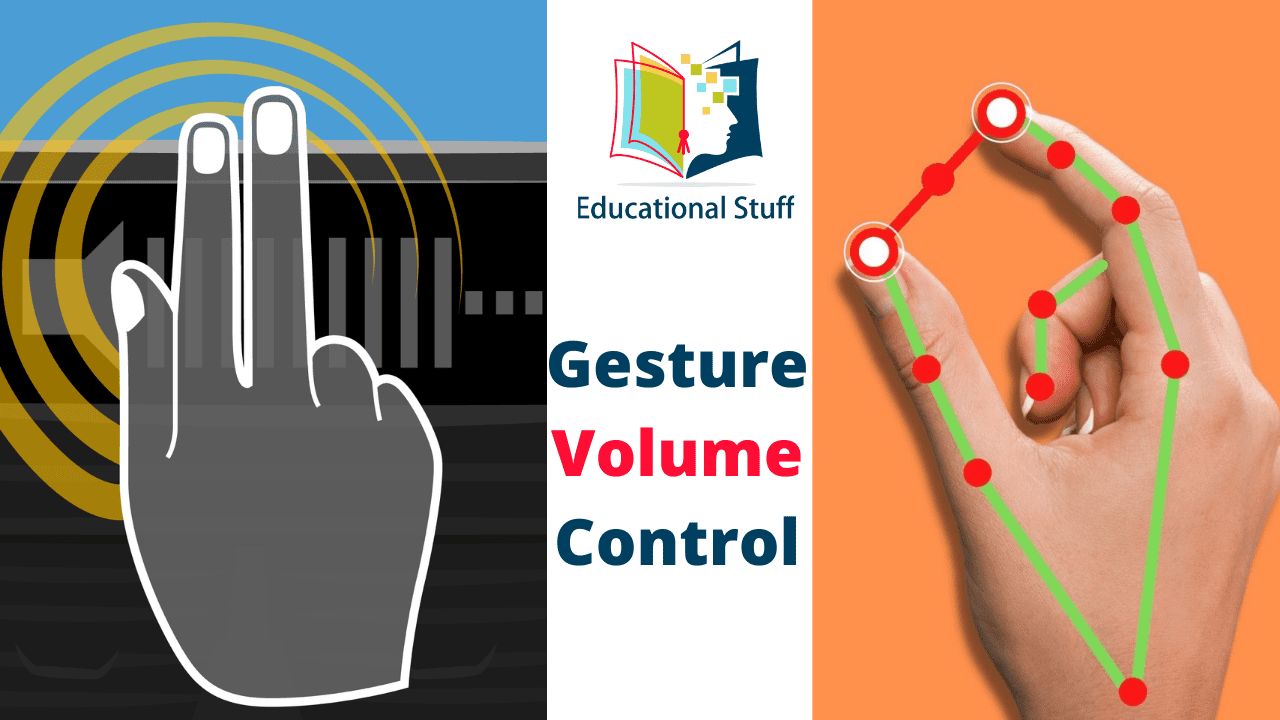

Gesture Volume Control Using OpenCV

In this post, we’ll learn how to control a computer’s volume with hand gestures (Gesture Volume Control). We’ll start with hand tracking and then use the hand landmarks to change the volume with a gesture. The project is module-based: we’ll use a prebuilt hand module that makes hand tracking a breeze.

If I asked you how to do hand gesture recognition in Python, what would be the first solution that comes to mind: training a CNN, contour detection, or convex hulls? These all sound reasonable and doable, but in practice their detection is poor and works only under particular conditions.

Gesture Volume Control

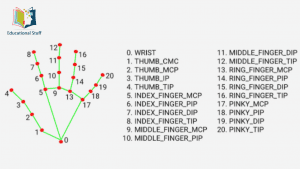

In this post, I’ll show you how to make two amazing projects with this library’s hands module. The hands module localizes your hand using 21 points: given an image of a hand, it returns a 21-point vector with the locations of 21 key landmarks on that hand.

The meaning of each point is the same regardless of the input image: point 4 is always the tip of your thumb, and point 8 is always the tip of your index finger. So, once you have the 21-point vector, the type of project you build is entirely up to you.
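To make the landmark idea concrete, here is a minimal sketch of how a thumb-to-index "pinch" could be turned into a volume level. It assumes the 21 landmarks have already been obtained as (x, y) pixel pairs from the hands module. The function names and the `min_dist`/`max_dist` thresholds are hypothetical and would need tuning for your camera, and actually setting the system volume is platform-specific and not shown here.

```python
import math

# Landmark indices as described above: 4 = thumb tip, 8 = index fingertip.
THUMB_TIP = 4
INDEX_TIP = 8


def pinch_distance(landmarks):
    """Return the Euclidean distance between the thumb tip and index fingertip.

    `landmarks` is a sequence of 21 (x, y) pairs in pixel coordinates.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 landmarks, got %d" % len(landmarks))
    (x1, y1), (x2, y2) = landmarks[THUMB_TIP], landmarks[INDEX_TIP]
    return math.hypot(x2 - x1, y2 - y1)


def distance_to_volume(distance, min_dist=30.0, max_dist=200.0):
    """Linearly map a pinch distance to a volume percentage in [0, 100].

    min_dist and max_dist are assumed pixel thresholds (hypothetical values):
    at or below min_dist the volume is 0, at or above max_dist it is 100.
    """
    clamped = max(min_dist, min(max_dist, distance))
    return 100.0 * (clamped - min_dist) / (max_dist - min_dist)


# Made-up landmarks for illustration; only points 4 and 8 matter here.
landmarks = [(0.0, 0.0)] * 21
landmarks[THUMB_TIP] = (100.0, 100.0)
landmarks[INDEX_TIP] = (160.0, 180.0)

volume = distance_to_volume(pinch_distance(landmarks))
print("volume: %.1f%%" % volume)
```

Clamping before the linear mapping keeps the result inside 0 to 100 even when the fingers are closer together or farther apart than the chosen thresholds.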

Features

- You can adjust your computer’s volume with hand gestures.

- You can track your hand in real time.

